CRC Part 2: Templates vs Performance

In CRC Part 1, we created an ideal function for computing the CRC-32C value for a range of data.

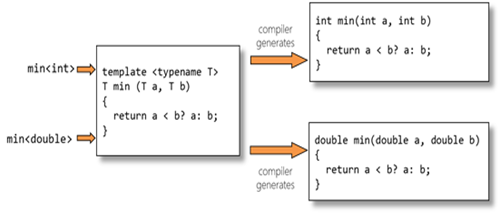

Can we create a template function without losing performance? Many people are under the impression that in C++, use of templates does not have a runtime performance penalty. The C++ FAQ claims "the compiler ends up figuring out the types of everything, and then doing all the usual nifty C++ optimizations." While this is true for many a toy example, generic code in the real world subjects the compiler to a number of burdens which often make those nifty optimizations less automatic than you might expect. Implementation B (using std::for_each) is one such toy example.

When thinking about writing a generic function or type, I find it best to start with some non-generic basis and build from there. The most obvious reason for doing this is because it is easier to write correct non-generic code; you never know when someone will do something ridiculous like overload unary operator& or provide T as a bool when your algorithm uses a std::vector<T, Alloc>. Furthermore, setting breakpoints on the function foo is significantly easier than trying to inform GDB the Lovecraftian horror of name mangling the equivalent template <typename T> foo() might become.

E: Generic C

The first question to ask is: What is pattern underlying crc32c which is applicable to other functions? The series of steps is pretty simple:

- Perform some initialization --

code = ~0 - For all data in input:

- Attempt to process the largest chunk --

crc32q - Fallback smaller by chunk --

crc32l,crc32w,crc32b

- Attempt to process the largest chunk --

- Perform finalization --

return ~code

This pattern is applicable to all sorts of SSE-type functions. When counting bits, one could use popcnt, which is offered in both 32- and 64-bit varieties. For randomly filling a buffer, you could use rdrnd, which comes in 16- and 64-bit modes. Even in cases where there is not a specific CPU extension to assist you, most algorithms see a performance benefit by loading more than 1 byte at a time. If you're writing memset or memcpy, writing out larger chunks is faster than the naïve byte-by-byte implementation.

Steps 1 & 3 cannot be made generic very easily, nor should they; regular code works perfectly fine for the variety of possible things you might consider "initialization" or "finalization." There is no common structure to these steps; it is completely arbitrary. We will spend our effort making steps 2.1 and 2.2 easier to use.

The top-level step 2 looks very similar to std::for_each, so that seems like a fine goal to work towards (keep your constructs familiar). We have only a couple more requirements. The most obvious one is since we want to take a different action for the varying chunk sizes, we have to provide the algorithm with multiple functions. This is accomplished by making the UnaryFunction parameter variadic. The second change is we assume an 8-byte aligned std::addressof(iter[0]) implies we can read the entire 8 bytes of memory as a single chunk, meaning we require a ContiguousIterator. A ContiguousIterator is also a RandomAccessIterator, which is convenient for jumping over sections of input by chunk (we could fake this with std::advance, but we're already stuck with something contiguous).

template <typename TContiguousIterator, typename... UnaryFunction>

void for_each_aligned(TContiguousIterator first, TContiguousIterator last, UnaryFunction... f);

Usage would look something like this:

for_each_aligned(first, last,

[&] (std::uint64_t x) { code = _mm_crc32_u64(code, x); },

[&] (std::uint32_t x) { code = _mm_crc32_u32(code, x); },

[&] (std::uint16_t x) { code = _mm_crc32_u16(code, x); },

[&] (std::uint8_t x) { code = _mm_crc32_u8 (code, x); }

);

This unwritten for_each_aligned function is taking our 4 lambdas and automatically inferring alignment from their operator()s. This is possible to do, but writing it would require template shenanigans which would detract from the point of this post. If you want to implement such a thing yourself, boost::function_traits is a great place to start.

Instead, we are going to take the lazier route and only give people const char*s and require users explicitly specify the desired alignment. But what to name it? std::destroy_at vs std::destroy is the closest thing to a pattern I can find in the C++ Standard Library; in contrast to _destroy_ing a range of values, we will perform that action at a point in memory.

template <std::size_t... ByteAlign, typename TChar, typename... UnaryFunction>

void at_each_aligned(TChar* first, TChar* last, UnaryFunction... f);

Why take TChar and accept TChar* instead of a proper TContiguousIterator? The short answer is: I'm lazy. I want to write reinterpret_cast<std::uintptr_t>(ptr) instead of the reinterpret_cast<std::uintptr_t>(std::addressof(*iter)) required for correctly dealing with crazy iterators. Hopefully that helps to make this slightly more readable.

In an ideal world, I would be able to write the at_each_aligned function like this:

template <std::size_t... ByteAlign, typename TChar, typename... UnaryFunction>

void at_each_aligned(TChar* first, TChar* last, UnaryFunction... f)

{

for ( ; first < last; /* inline */)

{

(if (reinterpret_cast<std::uintptr_t>(first) % ByteAlign == 0 && first + ByteAlign <= last)

{

f(first);

first += ByteAlign;

}

else)...

{ }

}

}

Unfortunately, template parameter packs can only be expanded in a certain specific contexts for expressions. A pack expansion which spans multiple statements is definitely not in the list of acceptable things to do (the if/else is an especially subtle source of error). We could attempt to merge all this into a single expression, taking advantage of short-circuiting or and the comma operator, but the resulting code would probably be frowned upon by reasonable human beings. So let's go with the classic solution: recursion!

namespace detail

{

template <std::size_t... ByteAlign>

struct at_each_aligned_e_impl;

template <std::size_t ByteAlign, std::size_t... ByteAlignRest>

struct at_each_aligned_e_impl<ByteAlign, ByteAlignRest...>

{

/** Step forward by the largest possible stride and return the next address to process. **/

template <typename TChar, typename FApply, typename... FApplyRest>

static TChar* step(TChar* first, TChar* last, const FApply& apply, const FApplyRest&... apply_rest)

{

if (reinterpret_cast<std::uintptr_t>(first) % ByteAlign == 0

&& first + ByteAlign <= last

)

{

apply(first);

return first + ByteAlign;

}

else

{

return at_each_aligned_e_impl<ByteAlignRest...>::step(first, last, apply_rest...);

}

}

};

// Base case: We must be capable of processing 1 byte at the front or the end.

template <>

struct at_each_aligned_e_impl<1>

{

template <typename TChar, typename FApply>

static TChar* step(TChar* first, TChar*, const FApply& apply)

{

apply(first);

return first + 1;

}

};

template <std::size_t... ByteAlign, typename TChar, typename... FApply>

void at_each_aligned_e(TChar* first, TChar* last, FApply&&... transform)

{

// The controlling function should look a lot like C from part 1

for ( ; first < last; /* inline */)

{

first = at_each_aligned_e_impl<ByteAlign...>::step(first, last, transform...);

}

}

}

template <std::size_t... ByteAlign, typename... FApply>

void at_each_aligned_e(const char* first, const char* last, FApply&&... transform)

{

return detail::at_each_aligned_e<ByteAlign...>(first, last,

std::forward<FApply>(transform)...

);

}

This generic at_each_aligned_e algorithm can be used in our CRC function with a few lambdas (decorated with some __attribute__ ugliness).

__attribute__((target("sse4.2")))

std::uint32_t crc32e(const char* first, const char* last)

{

std::uint32_t code = ~0U;

at_each_aligned_e<8, 4, 2, 1>

(

first, last,

[&] (const char* p64) __attribute__((target("sse4.2")))

{

code = _mm_crc32_u64(code, *reinterpret_cast<const std::uint64_t*>(p64));

},

[&] (const char* p32) __attribute__((target("sse4.2")))

{

code = _mm_crc32_u32(code, *reinterpret_cast<const std::uint32_t*>(p32));

},

[&] (const char* p16) __attribute__((target("sse4.2")))

{

code = _mm_crc32_u16(code, *reinterpret_cast<const std::uint16_t*>(p16));

},

[&] (const char* p8) __attribute__((target("sse4.2")))

{

code = _mm_crc32_u8 (code, *reinterpret_cast<const std::uint8_t*> (p8));

}

);

return ~code;

}

It isn't pretty, but it gets the job done. So how does it perform?

Ouch. Our generic version performs worse across the board. To figure out why, let's take a look at the disassembly of the crc32e function.

_Z6crc32ePKcS0_:

sub $0x58,%rsp

cmp %rsi,%rdi

mov %rdi,%rcx

lea 0xc(%rsp),%rax

movl $0xffffffff,0xc(%rsp)

mov %rsi,%r8

mov %rax,0x40(%rsp)

mov %rax,0x30(%rsp)

mov %rax,0x20(%rsp)

mov %rax,0x10(%rsp)

jne 40186f <_Z6crc32ePKcS0_+0x6f>

jmpq 4018cf <_Z6crc32ePKcS0_+0xcf>

nop

nopw %cs:0x0(%rax,%rax,1)

test $0x3,%cl

jne 40184e <_Z6crc32ePKcS0_+0x4e>

lea 0x4(%rcx),%r9

cmp %r9,%r8

jae 4018b0 <_Z6crc32ePKcS0_+0xb0>

test $0x1,%cl

jne 40185c <_Z6crc32ePKcS0_+0x5c>

lea 0x2(%rcx),%r9

cmp %r9,%r8

jae 4018c0 <_Z6crc32ePKcS0_+0xc0>

lea 0x40(%rsp),%rdi

callq 401710 <_ZZ6crc32ePKcS0_ENKUlS0_E2_clES0_>

add $0x1,%rcx

cmp %rcx,%r8

je 4018a0 <_Z6crc32ePKcS0_+0xa0>

test $0x7,%cl

mov %rcx,%rsi

jne 401840 <_Z6crc32ePKcS0_+0x40>

lea 0x8(%rcx),%r9

cmp %r9,%r8

jb 401840 <_Z6crc32ePKcS0_+0x40>

lea 0x10(%rsp),%rdi

callq 401730 <_ZZ6crc32ePKcS0_ENKUlS0_E_clES0_>

mov %r9,%rcx

cmp %rcx,%r8

jne 40186f <_Z6crc32ePKcS0_+0x6f>

nopl 0x0(%rax)

nopw %cs:0x0(%rax,%rax,1)

mov 0xc(%rsp),%eax

not %eax

add $0x58,%rsp

retq

nopl 0x0(%rax,%rax,1)

lea 0x20(%rsp),%rdi

callq 401750 <_ZZ6crc32ePKcS0_ENKUlS0_E0_clES0_>

mov %r9,%rcx

jmp 40186a <_Z6crc32ePKcS0_+0x6a>

nop

lea 0x30(%rsp),%rdi

callq 4017f0 <_ZZ6crc32ePKcS0_ENKUlS0_E1_clES0_>

mov %r9,%rcx

jmp 40186a <_Z6crc32ePKcS0_+0x6a>

xor %eax,%eax

jmp 4018a6 <_Z6crc32ePKcS0_+0xa6>

nop

xchg %ax,%ax

nopw %cs:0x0(%rax,%rax,1)

It is structurally similar to crc32c (as we would expect), but there is one major difference. This has 4 callq instructions, all jumping to functions named things like _ZZ6crc32ePKcS0_ENKUlS0_E_clES0_, which is the friendly mangled name for crc32e(char const*, char const*)::{lambda(char const*)#1}::operator()(char const*) const. Instead of inlining our lambda functions as we would hope for, GCC is performing a function call. This might not be a huge deal for many cases, but since the goal of the lambda functions is to emit a single instruction (crc32q, crc32l, etc), the function call overhead really hurts us.

F: Inline E

The key issue is the recursion. Getting to crc32q is a few function calls deep; crc32b is 7 levels deep. This is just too much for GCC to automatically flatten, so we need to help it out a bit.

Unfortunately, the inline keyword will not do what we want, because the inline specifier has very little to do with inlining code and more to do linkage specification (inline is in the Gallery of Misunderstood C++ Keywords). Luckily, GCC has a function attribute called always_inline, which tells it to do exactly what we want.

template <>

struct at_each_aligned_f_impl<1>

{

template <typename TChar, typename FApply>

__attribute__((always_inline)) inline

static TChar* step(TChar* first, TChar*, const FApply& apply)

{

apply(first);

return first + 1;

}

};

Nice. Our generic algorithm achieves parity with the non-generic version, but now we have a useful building block for quickly writing SIMD-utilizing functions.

G & H: Generic D & Inline G

If you look at the source code for this post, you wil find algorithms G and H. G is the generic version of algorithm D, while H is the inline version of G. The performance characteristics are the same as the C vs E vs F for the same reasons, so I'm just going to leave the chart here and call it a day.

Summary

Here is the ultimate graph of every algorithm (even those included only in the source code):

C++ implementations obey the zero-overhead principle: What you don’t use, you don’t pay for. What you do use, you couldn’t hand code any better.

- Bjarne Stroustrup

In C++, facilities like templates provide us with the ability to write abstracted code without overhead. It is a requirement for a modern, high-performance systems language. But we must be careful; sometimes the code we must write in order to achieve these abstractions incurs unexpected overhead. What's worse is this cost can vary between compiler vendors and versions. Test your software on a regular basis!