Home NAS with Ubuntu and ZFS

My little adventure in building the ultimate home NAS using Ubuntu and ZFS. Let's get right into the hardware specs:

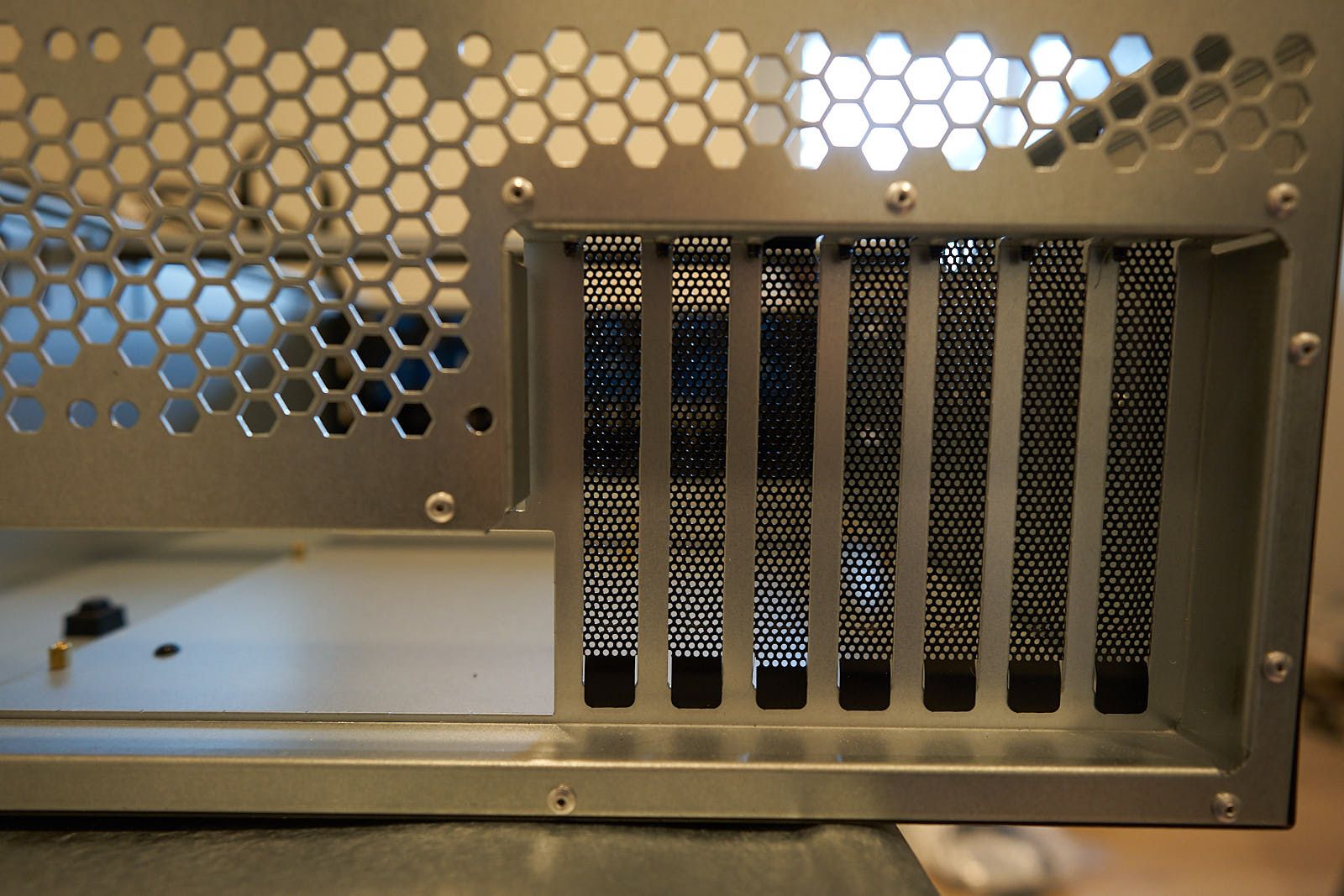

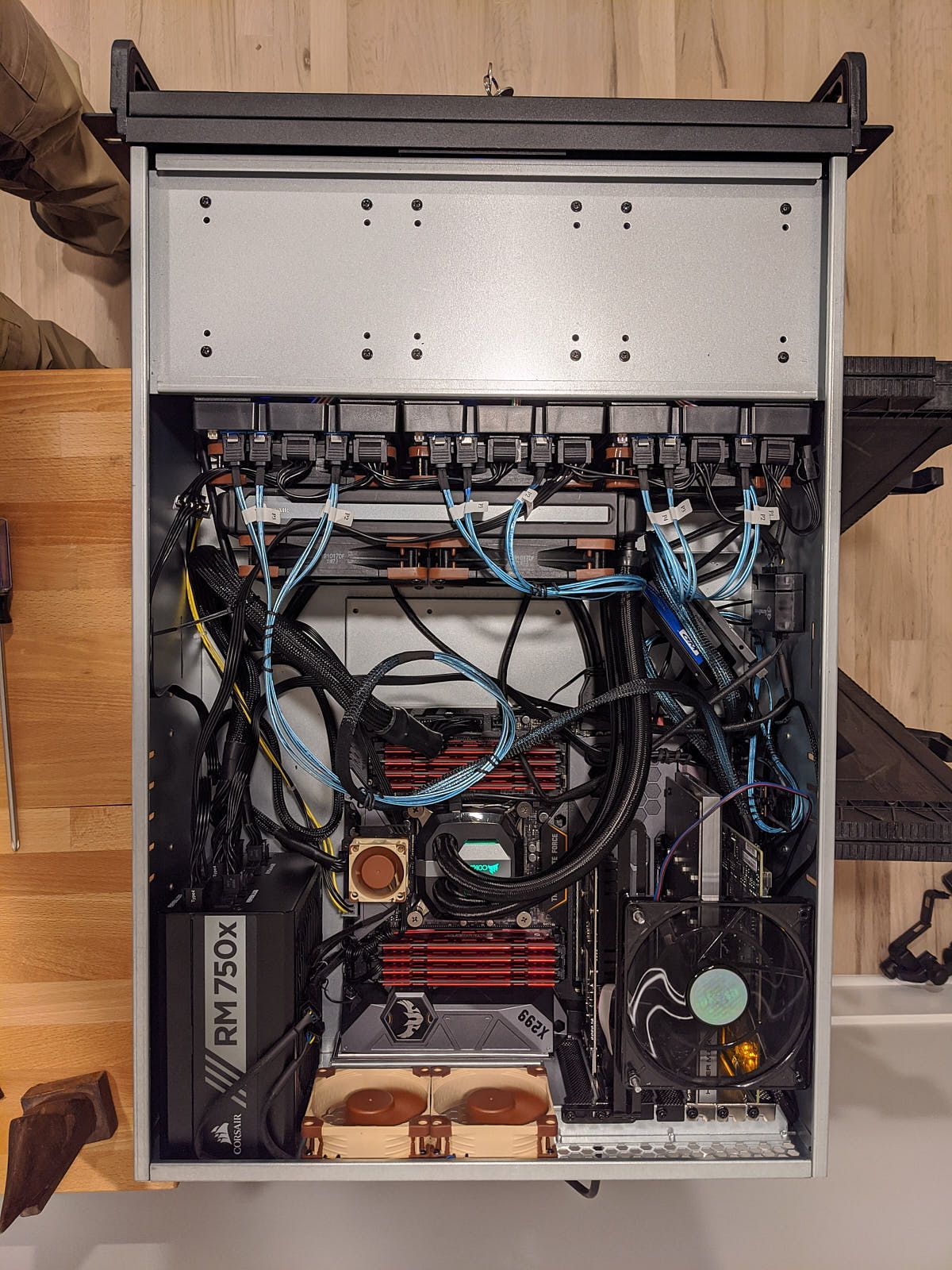

- Rosewill RSV-L4312 Chassis with 12 3.5-inch hotswap bays

- Intel Core i7-9800X (overclocked to 4.7GHz)

- 128GB Crucial Ballistix Sport LT 2666 MHz DDR4 DRAM

- Corsair H100i v2 AIO CPU Cooler

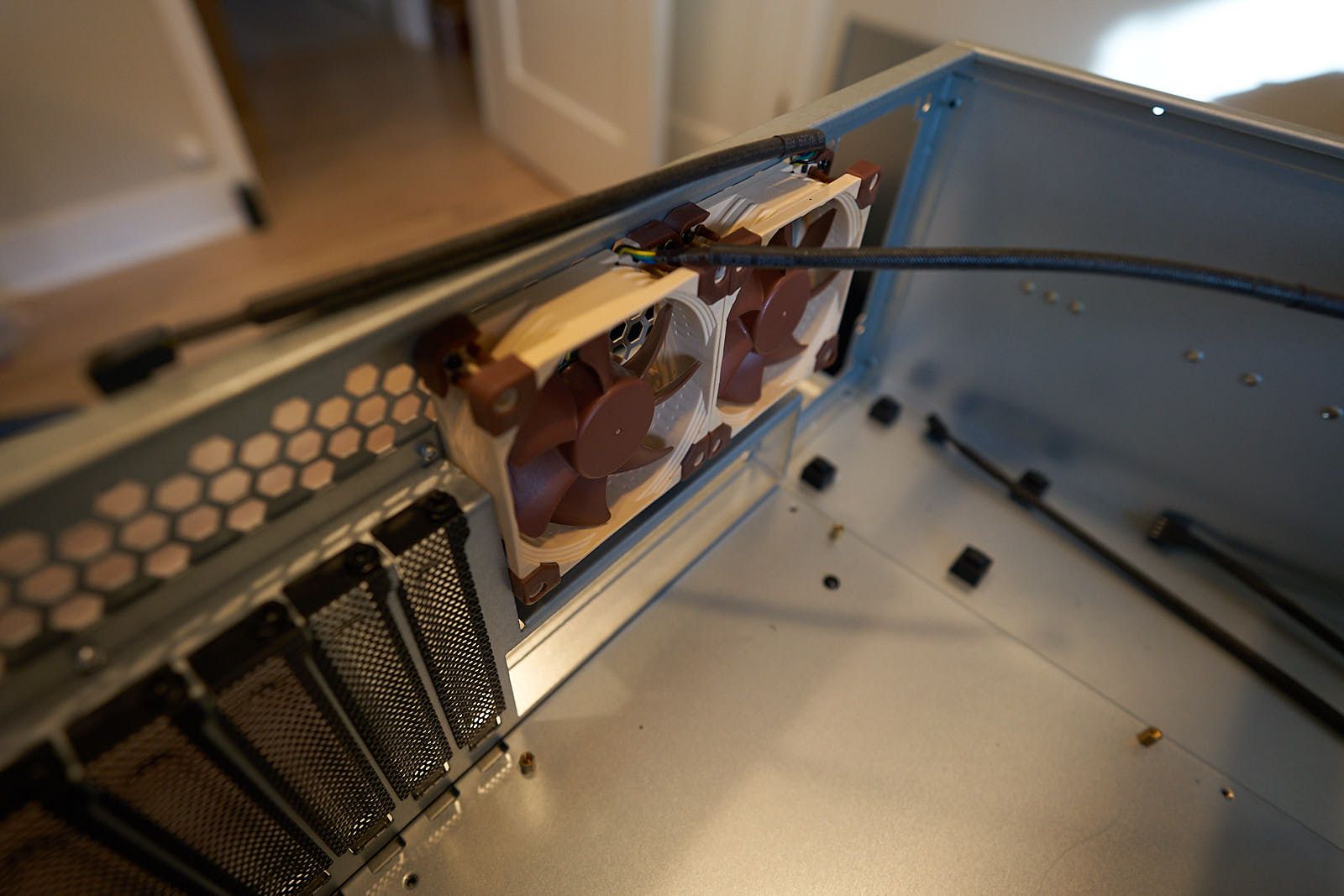

- Noctua NF-F12 industrialPPC-3000 PWM 120mm fans x5 (intake and radiator)

- Noctua NF-A14 industrialPPC-3000 PWM (PCIe slot cooling)

- Noctua NF-A8 PWM x2 (exhaust)

- Silverstone 8-Port PWM Fan Hub/Splitter

- ASUS X299 TUF Mark 1 Motherboard

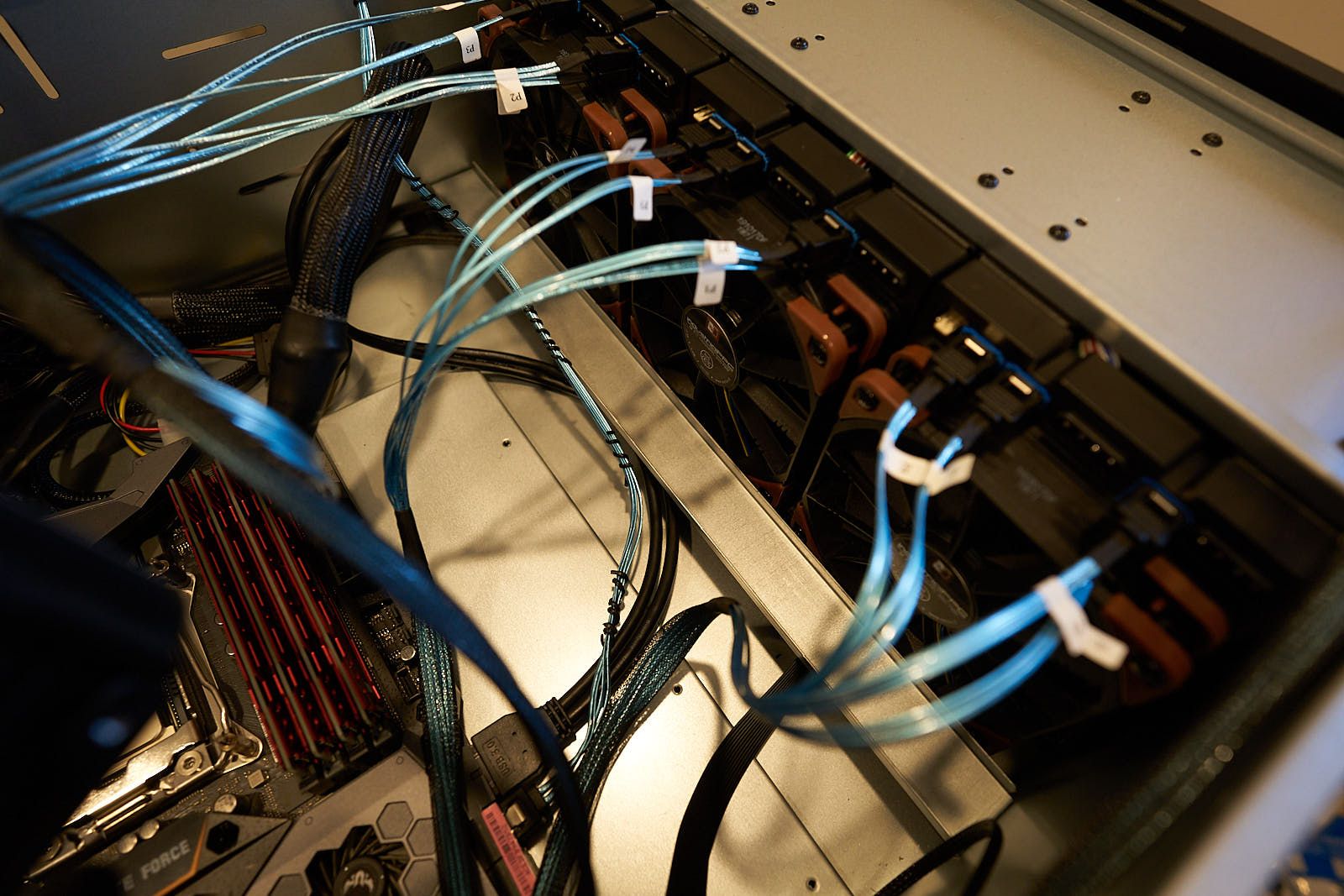

- ASUS Hyper Card v2

- LSI 9201-16i HBA

- Cable Matters Mini SAS (SFF-8087) to SATA Forward Breakout cables

- Seagate Exos X10 10TB (ST10000NM0086) HDD x12

- Western Digital Blue 3D NAND SSD 250GB x2 (OS software RAID-1)

- Samsung 970 Pro 1TB M.2 NVMe SSD x2 (ZFS L2ARC cache)

- Samsung 970 EVO Plus 1TB M.2 NVMe SSD x4 (ZFS L2ARC cache)

- Mellanox MCX311A-XCAT ConnectX-3 10GbE SFP+ Network Adapter

- ZOTAC GeForce GT 710

- Startech 6U Vertical Wall mount

Chassis

Initially, I chose the iStarUSA M-4160-ATX, but all 4 backplanes were DOA. I really, really wanted this chassis to work; its 16 hot-swap bays plus two 2.5-inch bays were ideal for my setup. But at $600+ USD, and with all 4 backplanes DOA, I didn't trust a replacement. I found the Rosewill RSV-L4312 on newegg.com as an open-box buy for $150 USD, so I decided to try it.

The Rosewill had 4 fewer front bays and no internal bays for OS drives. Bummer. But the Rosewill fit larger motherboards (E-ATX vs. ATX), and I was able to fit my Corsair H100i v2 AIO cooler inside. That was a bonus, because it let me keep my overclock settings, which I had to dial back when I tried a Noctua NH-U9DX i4 4U air cooler.

Water Cooling

Water cooling was a nice bonus. The main support brace had a little groove in it that actually fit the H100i v2 radiator perfectly!

I just drilled new holes and mounted the brace upside down. I have emailed the Rosewill parts department asking for another brace so I can clamp the radiator down from the top; otherwise, I will have to make some sort of clamp, or maybe just use some extra-strength rubber bands to hold the radiator securely.

ZFS

Whatever Linus says, Ubuntu + ZFS is awesome. I have 12 10TB disks: 11 in a raidz3 vdev plus 1 hot spare, with 6 1TB NVMe M.2 SSDs as L2ARC cache. I have read articles saying an L2ARC cache can slow you down, but with 10Gb SFP+ connections and 4K video editing in DaVinci Resolve 16 over network drives, the L2ARC cache really makes a difference. I guess it really depends on your workload. For things like Plex, I use a ZFS dataset in which the L2ARC caches only metadata, because filling the L2ARC with a sequential, read-once workload does not make a lot of sense.

$ zfs set secondarycache=metadata vol0/plex
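
For anyone building a similar layout, here is a hypothetical dry-run sketch in bash of how such a pool could be created. It is not a record of how vol0 was actually built: the `/dev/disk/by-id` paths are placeholders, and the script only echoes the commands so you can review them before removing the `echo`.

```shell
#!/usr/bin/env bash
# Hypothetical dry run: prints the commands instead of executing them.
# Every device path below is a placeholder, not a real disk ID.
DATA_DISKS=(/dev/disk/by-id/wwn-DATA{01..11})  # 11 raidz3 members
CACHE_DEVS=(/dev/disk/by-id/nvme-CACHE{1..6})  # 6 NVMe L2ARC devices
SPARE_DISK=/dev/disk/by-id/wwn-SPARE01         # 1 hot spare

# One raidz3 vdev, NVMe cache (L2ARC) devices, and a hot spare.
echo zpool create vol0 raidz3 "${DATA_DISKS[@]}" \
  cache "${CACHE_DEVS[@]}" spare "$SPARE_DISK"

# Setting the property at creation time instead of with `zfs set` later.
echo zfs create -o secondarycache=metadata vol0/plex

# To confirm on a live system: zfs get secondarycache vol0/plex
```

Using `/dev/disk/by-id` names (like the `wwn-` and `nvme-eui.` entries in the pool status) keeps the pool stable if the `sdX` letters shuffle between boots.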

Devices

| Device | Model | Firmware | Temperature |
|---|---|---|---|
| /dev/nvme0 | Samsung SSD 970 PRO 1TB | 1B2QEXP7 | 23°C |
| /dev/nvme1 | Samsung SSD 970 PRO 1TB | 1B2QEXP7 | 25°C |
| /dev/nvme2 | Samsung SSD 970 EVO Plus 1TB | 2B2QEXM7 | 26°C |
| /dev/nvme3 | Samsung SSD 970 EVO Plus 1TB | 2B2QEXM7 | 27°C |
| /dev/nvme4 | Samsung SSD 970 EVO Plus 1TB | 2B2QEXM7 | 27°C |
| /dev/nvme5 | Samsung SSD 970 EVO Plus 1TB | 2B2QEXM7 | 27°C |
| /dev/sda | ST10000NM0086-2AA101 | SN05 | 25°C |
| /dev/sdb | ST10000NM0086-2AA101 | SN05 | 26°C |
| /dev/sdc | ST10000NM0086-2AA101 | SN05 | 26°C |
| /dev/sdd | ST10000NM0086-2AA101 | SN05 | 26°C |
| /dev/sde | ST10000NM0086-2AA101 | SN05 | 24°C |
| /dev/sdf | ST10000NM0086-2AA101 | SN05 | 24°C |
| /dev/sdg | ST10000NM0086-2AA101 | SN05 | 24°C |
| /dev/sdh | ST10000NM0086-2AA101 | SN05 | 24°C |
| /dev/sdi | ST10000NM0086-2AA101 | SN05 | 24°C |
| /dev/sdj | ST10000NM0086-2AA101 | SN05 | 25°C |
| /dev/sdk | ST10000NM0086-2AA101 | SN05 | 24°C |
| /dev/sdl | ST10000NM0086-2AA101 | SN05 | 24°C |
| /dev/sdm | WDC WDS250G2B0A-00SM50 | 401000WD | 28°C |
| /dev/sdn | WDC WDS250G2B0A-00SM50 | 401000WD | 27°C |

I use the 2 M.2 slots on the motherboard plus the 4 M.2 slots on the ASUS Hyper Card v2 for the 6 L2ARC SSDs. One of my Seagate drives had older firmware, but I was able to update it from the command line using Seagate's tools from their support site.
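
A device list like the one above can be approximated with smartmontools. This is a sketch under that assumption, not necessarily the tool that produced the table: the hypothetical `smart_model_fw` helper pulls the model and firmware out of `smartctl -i` output, which makes a drive still on older firmware easy to spot before updating it.

```shell
#!/usr/bin/env bash
# Reads `smartctl -i` output on stdin and prints "model | firmware".
# SATA drives label the model "Device Model:", NVMe drives "Model Number:".
smart_model_fw() {
  awk -F': +' '
    /^(Device Model|Model Number):/ { model = $2 }
    /^Firmware Version:/            { fw = $2 }
    END { print model " | " fw }'
}

# Example loop (requires smartmontools and root):
#   for d in /dev/sd? /dev/nvme?; do
#     echo "$d | $(sudo smartctl -i "$d" | smart_model_fw)"
#   done
```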

The OS drives are just generic Western Digital Blue SSDs in software RAID-1. They don't see much action, so I am not worried about SSD wear.
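
Since the OS mirror gets little attention, a quick health check is still worth having. Assuming the RAID-1 is a Linux md array managed by mdadm, `/proc/mdstat` shows each member as `U` (up) or `_` (missing); the hypothetical `md_degraded` helper below prints any array with a missing member.

```shell
#!/usr/bin/env bash
# Reads /proc/mdstat on stdin and prints the name of every md array whose
# member-status field (e.g. "[UU]") contains "_", meaning a missing member.
md_degraded() {
  awk '/^md[0-9]+ :/ { name = $1 }
       /\[[0-9]+\/[0-9]+] \[[U_]+]/ { if ($NF ~ /_/) print name }'
}

# Usage: md_degraded < /proc/mdstat   (no output means every array is whole)
```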

IO udev Rules

/etc/udev/rules.d/99-schedulers.rules

ACTION=="add|change", KERNEL=="sd[a-z]", ATTRS{model}=="ST10000NM0086-2A", ATTR{queue/scheduler}="deadline", ATTR{queue/read_ahead_kb}="256", ATTR{queue/nr_requests}="256"

ACTION=="add|change", KERNEL=="sd[a-z]", ATTR{queue/rotational}=="0", ATTR{queue/scheduler}="deadline", ATTR{queue/read_ahead_kb}="4096", ATTR{queue/nr_requests}="512"

ACTION=="add|change", KERNEL=="nvme[0-9]n1", ATTR{queue/read_ahead_kb}="4096"
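
udev applies these rules when it processes an event for a device, so after editing the file you can reload the rules and re-trigger block devices instead of rebooting. The sketch below (the `active_scheduler` and `report_queues` helpers are hypothetical) then reads the values back from sysfs. On blk-mq kernels the scheduler typically shows up as `mq-deadline`, which the kernel also accepts under the `deadline` alias.

```shell
#!/usr/bin/env bash
# The kernel lists every available scheduler in queue/scheduler and marks
# the active one with brackets, e.g. "[mq-deadline] none". Print just that one.
active_scheduler() {
  sed -n 's/.*\[\([^]]*\)].*/\1/p'
}

# Report scheduler, read-ahead and queue depth for each SATA/NVMe disk present.
report_queues() {
  local dev q
  for dev in /sys/block/sd* /sys/block/nvme*n1; do
    q="$dev/queue"
    [ -r "$q/scheduler" ] || continue  # also skips unmatched glob patterns
    printf '%s scheduler=%s read_ahead_kb=%s nr_requests=%s\n' \
      "${dev##*/}" "$(active_scheduler < "$q/scheduler")" \
      "$(cat "$q/read_ahead_kb")" "$(cat "$q/nr_requests")"
  done
}

# Typical workflow after editing /etc/udev/rules.d/99-schedulers.rules:
#   sudo udevadm control --reload-rules
#   sudo udevadm trigger --subsystem-match=block --action=change
#   report_queues
```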

Zpool Status

      pool: vol0
     state: ONLINE
      scan: scrub repaired 0B in 6h43m with 0 errors on Mon Jan 13 06:22:53 2020
    config:

            NAME                         STATE     READ WRITE CKSUM
            vol0                         ONLINE       0     0     0
              raidz3-0                   ONLINE       0     0     0
                wwn-0x5000c500b37d1d7c   ONLINE       0     0     0
                wwn-0x5000c500b2769d77   ONLINE       0     0     0
                wwn-0x5000c500b26e6c80   ONLINE       0     0     0
                wwn-0x5000c500b152e4a2   ONLINE       0     0     0
                wwn-0x5000c500b26e22b6   ONLINE       0     0     0
                wwn-0x5000c500b26d8443   ONLINE       0     0     0
                wwn-0x5000c500b4f69bcb   ONLINE       0     0     0
                wwn-0x5000c500b697cd6a   ONLINE       0     0     0
                wwn-0x5000c500b4f9a95e   ONLINE       0     0     0
                wwn-0x5000c500b697219e   ONLINE       0     0     0
                wwn-0x5000c500c3b3928d   ONLINE       0     0     0
            cache
              nvme-eui.0025385881b01bc5  ONLINE       0     0     0
              nvme-eui.0025385881b01ba5  ONLINE       0     0     0
              nvme-eui.0025385b9150d35a  ONLINE       0     0     0
              nvme-eui.0025385391b2a3a6  ONLINE       0     0     0
              nvme-eui.0025385391b2a375  ONLINE       0     0     0
              nvme-eui.0025385b9150efa6  ONLINE       0     0     0
            spares
              wwn-0x5000c500b6970b24     AVAIL

    errors: No known data errors

Zpool IO Status

                                       capacity     operations    bandwidth
    pool                         alloc   free   read  write   read  write
    ---------------------------  -----  -----  -----  -----  -----  -----
    vol0                         27.3T  72.7T  1.24K  1.16K  30.3M  29.7M
      raidz3                     27.3T  72.7T  1.24K  1.16K  30.3M  29.7M
        wwn-0x5000c500b37d1d7c       -      -    116    108  2.75M  2.70M
        wwn-0x5000c500b2769d77       -      -    120    108  2.74M  2.70M
        wwn-0x5000c500b26e6c80       -      -    114    108  2.76M  2.70M
        wwn-0x5000c500b152e4a2       -      -    117    109  2.75M  2.70M
        wwn-0x5000c500b26e22b6       -      -    115    108  2.76M  2.70M
        wwn-0x5000c500b26d8443       -      -    114    107  2.76M  2.70M
        wwn-0x5000c500b4f69bcb       -      -    111    108  2.77M  2.70M
        wwn-0x5000c500b697cd6a       -      -    118    108  2.74M  2.70M
        wwn-0x5000c500b4f9a95e       -      -    113    107  2.77M  2.70M
        wwn-0x5000c500b697219e       -      -    115    108  2.76M  2.70M
        wwn-0x5000c500c3b3928d       -      -    114    107  2.76M  2.70M
    cache                            -      -      -      -      -      -
      nvme-eui.0025385881b01bc5  3.27G   951G      0     22     99  2.44M
      nvme-eui.0025385881b01ba5  4.82G   949G      0     37     99  3.60M
      nvme-eui.0025385b9150d35a  3.58G   928G      0     30     99  2.68M
      nvme-eui.0025385391b2a3a6  3.86G   928G      0     29     99  2.87M
      nvme-eui.0025385391b2a375  3.81G   928G      0     43     99  2.84M
      nvme-eui.0025385b9150efa6  3.25G   928G      0     23     99  2.44M
    ---------------------------  -----  -----  -----  -----  -----  -----
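
The pool shows about 100T in total (27.3T allocated plus 72.7T free) because `zpool iostat`, like `zpool list`, reports raw raidz capacity with parity included, in binary units. A quick sketch of the arithmetic, using a hypothetical `raidz_capacity` helper and assuming the drives' decimal 10TB rating:

```shell
#!/usr/bin/env bash
# Raw vs. parity-free capacity of a single raidz vdev, in TiB.
# Args: disk count, parity level (3 for raidz3), disk size in decimal TB.
raidz_capacity() {
  awk -v n="$1" -v p="$2" -v tb="$3" 'BEGIN {
    tib  = 2 ^ 40                  # bytes per TiB
    raw  = n * tb * 1e12           # every disk, parity included
    data = (n - p) * tb * 1e12     # data disks only
    printf "raw=%.1fTiB data=%.1fTiB\n", raw / tib, data / tib
  }'
}

raidz_capacity 11 3 10   # prints: raw=100.0TiB data=72.8TiB
```

The parity-free 72.8TiB is still an upper bound: ZFS reserves some slop space and raidz allocation adds padding, so `zfs list` reports somewhat less usable space.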

Cooling and Noise

The Corsair AIO cooler is nice to have. My i7-9800X is overclocked to a 4.7GHz all-core boost, using a -6 AVX offset because AVX instructions have an insane power and heat profile. The final configuration is 3 Noctua 120mm fans pulling air through the hot-swap bays and 2 more pulling air through the radiator. The PCIe slots need a fan because the LSI 9201-16i runs insanely hot: so hot that, even at idle, it caused the Mellanox Ethernet adapter to throw kernel errors about core temperatures over 105°C!
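
The AVX offset is simple arithmetic on the CPU multiplier. Assuming the 4.7GHz overclock is a 47x multiplier on the stock 100MHz base clock (BCLK), a -6 offset brings AVX-heavy work down to about 4.1GHz. The `avx_clock_mhz` helper is a hypothetical illustration:

```shell
#!/usr/bin/env bash
# Effective core clock in MHz = (multiplier - AVX offset) * BCLK.
# Assumes the stock 100 MHz BCLK unless a third argument overrides it.
avx_clock_mhz() {
  local ratio=$1 offset=$2 bclk=${3:-100}
  echo $(( (ratio - offset) * bclk ))
}

avx_clock_mhz 47 0   # non-AVX all-core clock: 4700
avx_clock_mhz 47 6   # clock while running AVX code: 4100
```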

Idle Temps

    $ sensors
    coretemp-isa-0000
    Adapter: ISA adapter
    i7-9800x:     +30.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 0:       +29.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 1:       +27.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 2:       +28.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 3:       +29.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 4:       +28.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 5:       +28.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 6:       +30.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 7:       +29.0°C  (high = +100.0°C, crit = +110.0°C)

mprime (Prime95) Temps

    $ sensors
    coretemp-isa-0000
    Adapter: ISA adapter
    i7-9800x:     +75.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 0:       +69.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 1:       +74.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 2:       +72.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 3:       +73.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 4:       +60.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 5:       +75.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 6:       +68.0°C  (high = +100.0°C, crit = +110.0°C)
    Core 7:       +66.0°C  (high = +100.0°C, crit = +110.0°C)

Never breaking 78°C under load is not bad for a 4U chassis and its airflow.
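
To pull the hottest core out of `sensors` output during a stress run, a small filter helps. This sketch assumes the `Core N:` line format shown above; labels can differ between CPUs and `sensors` configurations, and `max_core_temp` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Reads `sensors` output on stdin and prints the highest "Core N:" reading.
max_core_temp() {
  awk '/^Core [0-9]+:/ {
         t = $3                      # e.g. "+75.0°C"
         gsub(/[^0-9.]/, "", t)      # strip sign and unit -> "75.0"
         if (t + 0 > max) max = t + 0
       }
       END { printf "%.1f\n", max }'
}

# Usage while mprime runs in another terminal:  sensors | max_core_temp
```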

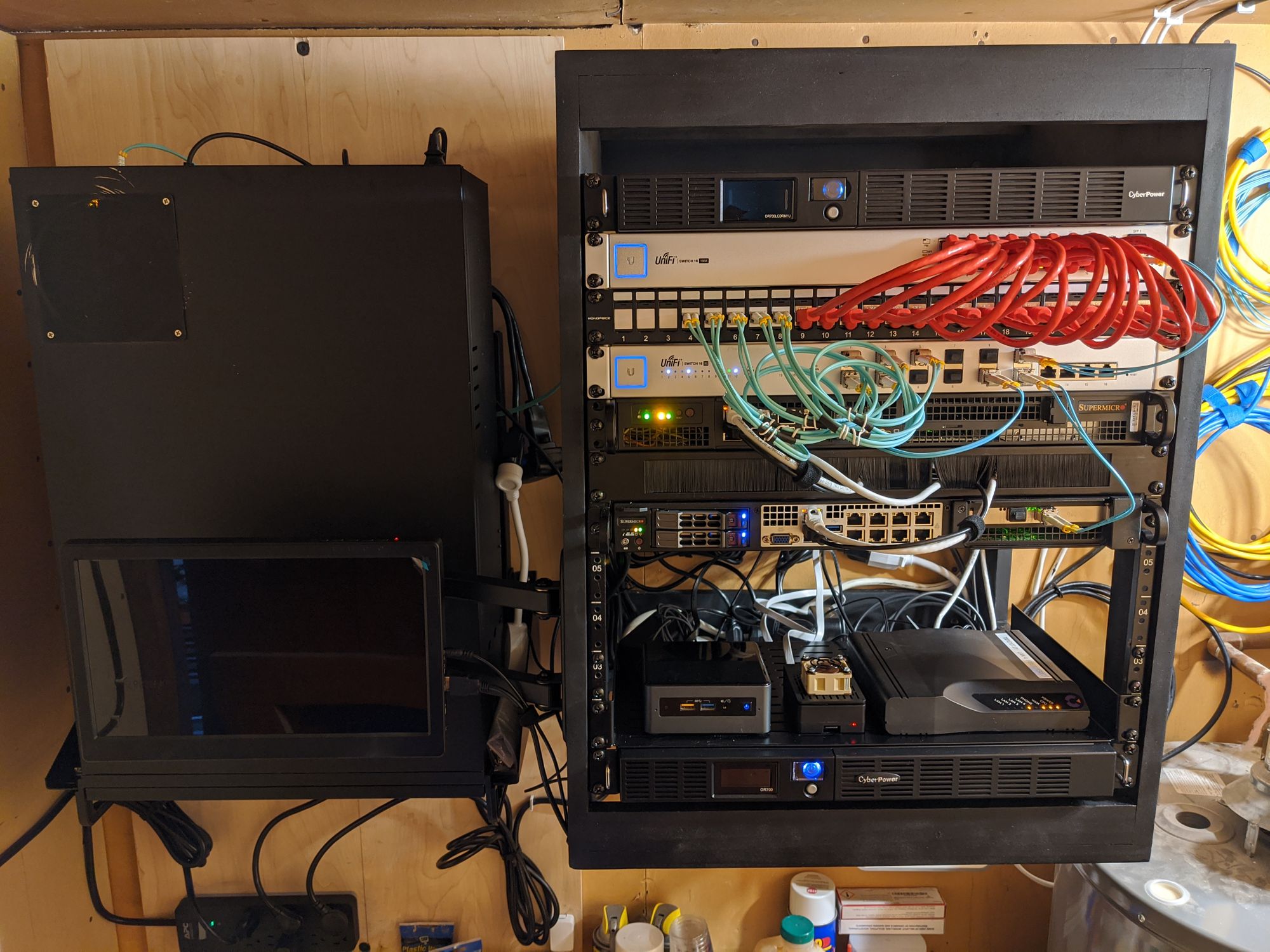

Vertical 6U (upside down)

I have a very narrow utility room in my 1952 ranch-style house, so I needed to mount the server vertically. I used a Startech 6U vertical mount, and I mounted everything upside down, given that hot air rises! For power, I'm using a 1U CyberPower OR1500LCDRM1U Smart App LCD UPS System (1500VA/900W). I mounted the UPS closest to the wall, left 1U of empty space, and mounted the Rosewill chassis in the outer 4U. A 2-foot by 4-foot sheet of 3/4-inch birch plywood is mounted directly to the wall studs, and the Startech 6U rack is mounted to the plywood.

Updated Homelab

I upgraded from the USG-4 Pro to the UDM Pro, absolutely hated the disaster that is UniFi's routing products, and got rid of both. I also added a second UPS and painted my wooden 12U rack black.

Now I'm running pfSense on a Supermicro SuperServer 5019D-FN8TP and Proxmox on a Supermicro SuperServer 1019C-FHTN8 with an Intel Xeon E-2278G CPU.